I have long been frustrated by the inability to download multiple files from SharePoint as a zip file via code, in the same way it’s possible through the user interface. When this suddenly became a requirement from a customer , I had to come up with a solution 💡. Naturally, the solution needed to be robust 💪 — I could use the endpoint that SharePoint itself utilizes, though it’s not officially documented, but I decided against taking that route 🚫.

What was the solution then? 🤔

The solution came to me 🤔 while setting up the App Service Plan for my function—it struck me that I had 250GB of storage (App Service Premium Plan) available. I figured I could use this for something.

I was initially unsure whether I had permissions to write to this storage, but after a quick test, I confirmed that I could easily write to it—and, of course, read from it again. 🚀

Whether it’s the best solution to the problem, I’m not sure 🤷♂️, but I know it works, and I have full control over it, ensuring it won’t suddenly disappear—unlike an unofficial endpoint might. 💡

Fetch the files 📥

When fetching the files, it’s, of course, important to minimize the number of calls to avoid throttling. The way I’ve attempted to prevent this is by using Graph batching, which allows me to bundle 20 requests into one. 📦

Example class

public class FileInfoDTO {

public string? FileName { get; set; }

public string? RelativePath { get; set; }

}

Graph batching

internal async Task DownloadFilesFromPathsToTempFolderAsync(

List<FileInfoDTO?>? fileInfo,

string tempFolderPath,

Site siteInfo,

string? driveId) {

IEnumerable<FileInfoDTO?[]> chucked = fileInfo!.Chunk(20);

foreach (FileInfoDTO?[] chunk in chucked) {

await DownloadFilesFromPathsToTempFolderAsync(chunk, tempFolderPath, siteInfo, driveId);

}

}

private async Task DownloadFilesFromPathsToTempFolderAsync(

FileInfoDTO?[] fileInfos,

string tempFolderPath,

Site siteInfo,

string? driveId) {

using BatchRequestContent batchRequestContent = new BatchRequestContent();

Dictionary<string, string> nameMapping = new Dictionary<string, string>(fileInfos.Length);

foreach (FileInfoDTO? fileInfo in fileInfos) {

string requestId = batchRequestContent.AddBatchRequestStep(

GraphClient.Sites[siteInfo.Id]

.Drives[driveId]

.Root

.ItemWithPath(fileInfo.RelativePath)

.Content.Request());

nameMapping[requestId] = fileInfo.FileName!;

}

BatchResponseContent batchResponse = await GraphClient.Batch.Request().PostAsync(batchRequestContent);

Dictionary<string, HttpResponseMessage> responses = await batchResponse.GetResponsesAsync();

foreach ((string requestId, HttpResponseMessage response) in responses) {

if (!response.IsSuccessStatusCode && response.StatusCode != HttpStatusCode.Redirect) {

throw new Exception($"Error while getting file content: {response.ReasonPhrase}");

}

string filePath = Path.Combine(tempFolderPath, nameMapping[requestId]);

if (response.StatusCode == HttpStatusCode.Redirect) {

await DownloadFileFromRedirectAsync(response.Headers.Location, filePath);

} else {

await WriteContentToFileAsync(response.Content, filePath);

}

response.Content.Dispose();

}

}

You should be aware that the StatusCode returned may not always be 200 and can still be valid — many of my requests, for example, returned with a Redirect. 🔄 As a result, I had to implement handling for that as well.

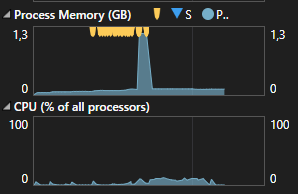

When I finally managed to get all my requests working to fetch the files, the next problem arose… How could I download the files and save them to my tempFolderPath without loading all the files into memory?

If I did, I’d quickly run out of Memory. The solution to this turned out to be the following:

Example class

private async Task DownloadFileFromRedirectAsync(Uri? redirectUri, string filePath) {

using HttpResponseMessage redirectResponse =

await GraphClient.HttpProvider.SendAsync(

new HttpRequestMessage(HttpMethod.Get, redirectUri));

redirectResponse.EnsureSuccessStatusCode();

await WriteContentToFileAsync(redirectResponse.Content, filePath);

}

private async Task WriteContentToFileAsync(HttpContent content, string filePath) {

await using FileStream fileStream =

new FileStream(

filePath,

FileMode.Create,

FileAccess.Write,

FileShare.None,

4096,

true);

await using Stream contentStream =

await content.ReadAsStreamAsync();

await contentStream.CopyToAsync(fileStream);

}

By using using statements and ReadAsStream, each file only resides in Memory for a very short time before it is disposed. ♻️💡

Example of a large file

Return the ZIP file 🚀

So how did I implement it all in an endpoint? I did it as follows, and it even works locally on my PC, allowing me to test it easily. To avoid using too much Memory again, I return the ZIP file as a FileStreamResult. 🚀🗂️

[Function("DownloadFilesFromSharepoint")]

public async Task<IActionResult> DownloadFilesFromSharepoint(

[HttpTrigger(AuthorizationLevel.Anonymous, "post", Route = "files/download")] HttpRequest req) {

System.Guid guid = System.Guid.NewGuid();

string tempFolderPath = System.IO.Path.Combine(System.IO.Path.GetTempPath(), guid.ToString());

string zipFilePath = System.IO.Path.Combine(System.IO.Path.GetTempPath(), $"{guid}.zip");

_logger.LogInformation($"Zip file path: {zipFilePath}");

List<FileInfoDTO?>? fileInfo = await ...;

if (!System.IO.Directory.Exists(tempFolderPath)) {

System.IO.Directory.CreateDirectory(tempFolderPath);

}

await DownloadFilesFromPathsToTempFolderAsync(fileInfo, tempFolderPath);

System.IO.Compression.ZipFile.CreateFromDirectory(tempFolderPath, zipFilePath);

System.IO.Directory.Delete(tempFolderPath, true);

return new FileStreamResult(new FileStream(zipFilePath, FileMode.Open), "application/zip") {

FileDownloadName = "files.zip",

EnableRangeProcessing = true,

};

}

However, there’s one thing I haven’t yet found the perfect solution for—namely, deleting my ZIP files. 🗑️

Since I return them as a FileStreamResult and don’t load them into Memory as a byte[], I can’t delete the files immediately. Instead, I handle this with a timer job afterward, which I don’t think is the best solution. 🤷♂️⏳

But again, it solved the customer’s problem, and they’re happy 😊, so I’m not planning to do much more about it. 🚀

TL;DR

This post explores how to programmatically download multiple files from SharePoint as a zip file using C# and Microsoft Graph API. 🚀

Key takeaways:

- Storage Solution: Leveraged 250GB of App Service Premium storage to temporarily store files.

- Efficient API Usage: Minimized Graph API calls using batching to avoid throttling (bundling 20 requests into one).

- Memory Optimization: Used

ReadAsStreamandFileStreamResultto handle files efficiently without overloading RAM. - ZIP File Handling: Created a ZIP file from the downloaded files and returned it via a streaming endpoint.

- Cleanup Challenge: Deleting ZIP files after returning them remains unresolved, currently handled via a timer job.

It’s not perfect, but it works, and most importantly, it solved the customer’s problem. 😊